Spotify Canvas - AI Music Platform

The current music experience, though personal, lacked a soundtrack that evolved with each listener. To address this creative fragmentation, Spotify Canvas was conceived: an AI-powered music creation platform that turns listening into co-creation. Through a meticulous design fiction process, an intuitive interface was conceptualized that generates complete songs, with personalized lyrics and album art tailored to the listener's taste. Because this function exists only in a fictional world, it acts as a seed, a narrative object that provokes deep reflection on AI, music, and creativity, and opens topics for near or distant futures.

Where it went wrong

In the era of digital music, millions of listeners enjoy playlists and recommendations, yet a silent question lingered: what if music were created for you and by you, without limits? Current platforms, though advanced, left a gap. There was no way for music to evolve with its listeners or to be truly tailored to their preferences.

Existing AI tools generated text or images, and even music, but lacked the ability to orchestrate a complete song, with lyrics and album art, based solely on individual listener tastes. This creative fragmentation meant that the musical experience, while personal, wasn’t unique in its essence. Users were missing out on the possibility of having a soundtrack that truly represented them.

How it was fixed

To address this gap in personalized musical experience, the answer was forged through a meticulous Design Fiction process. It began by observing the faint signals of AI in content creation (text, images, music), extrapolating a future where AI would generate music without prompts, based solely on listener tastes. This culminated in the key question: What if Spotify created complete AI songs, personalized for each listener?

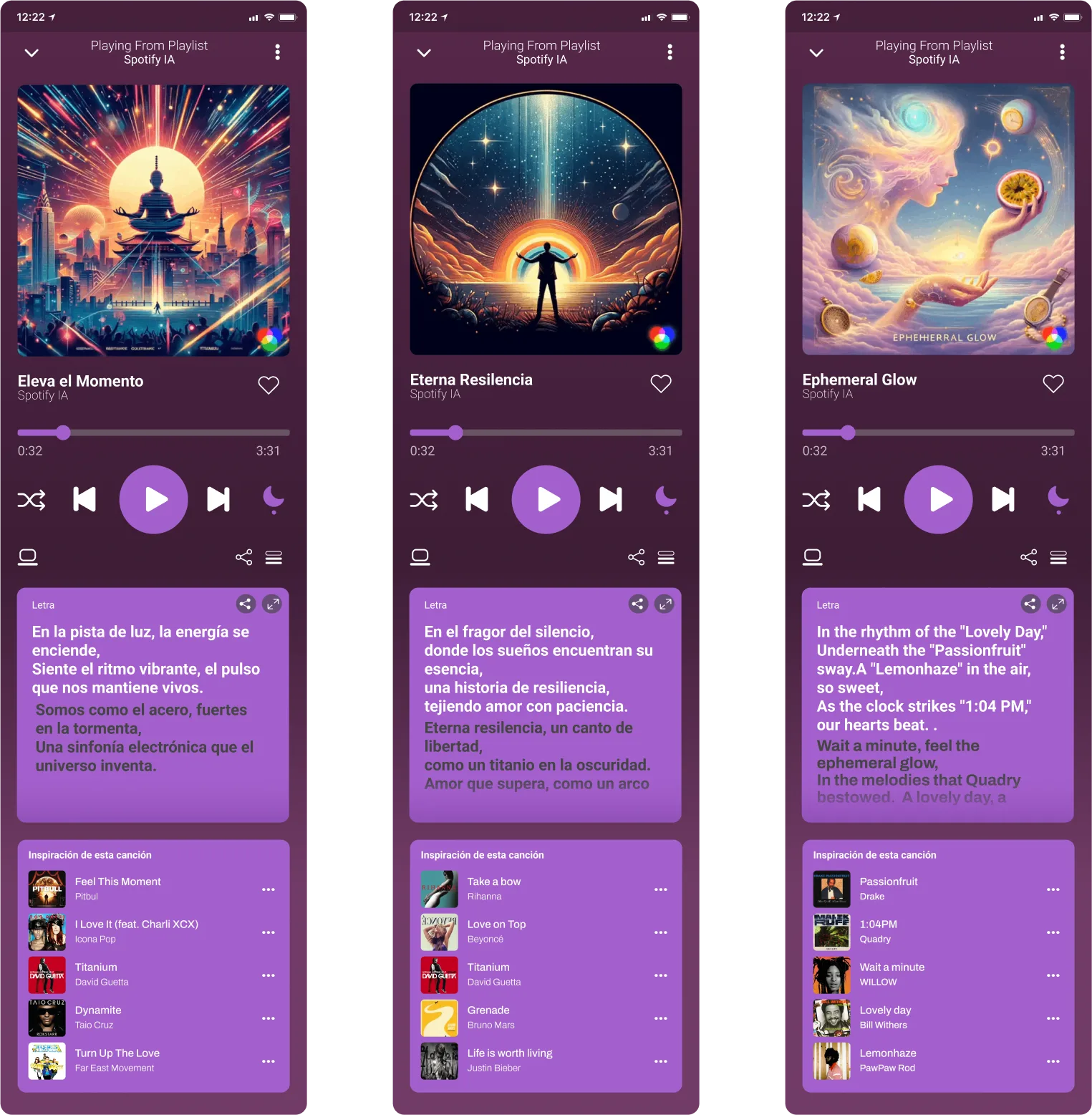

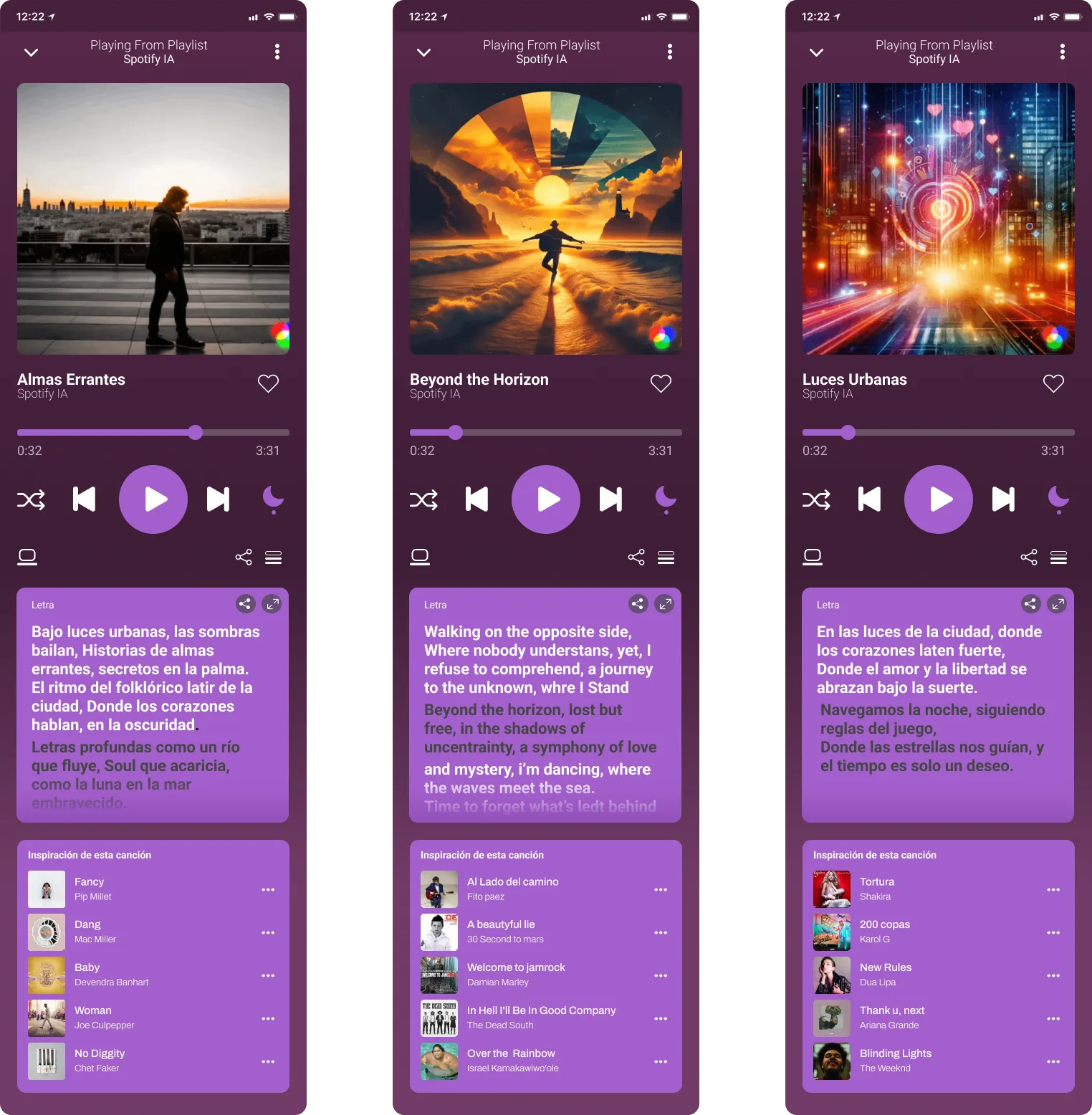

With this vision, the ‘thing’ was materialized: a diegetic prototype of a Spotify interface featuring this AI-generated music function. AI prompts were collaboratively defined, using data from base songs to generate music, lyrics, and album covers. This prototype, with its intuitive onboarding and sound-integrated interactions, not only showcased a possible future but, when disseminated to a UX/UI audience, sparked critical debate about creativity, personalization, and the implications of AI in music, inviting reflection on future solutions.

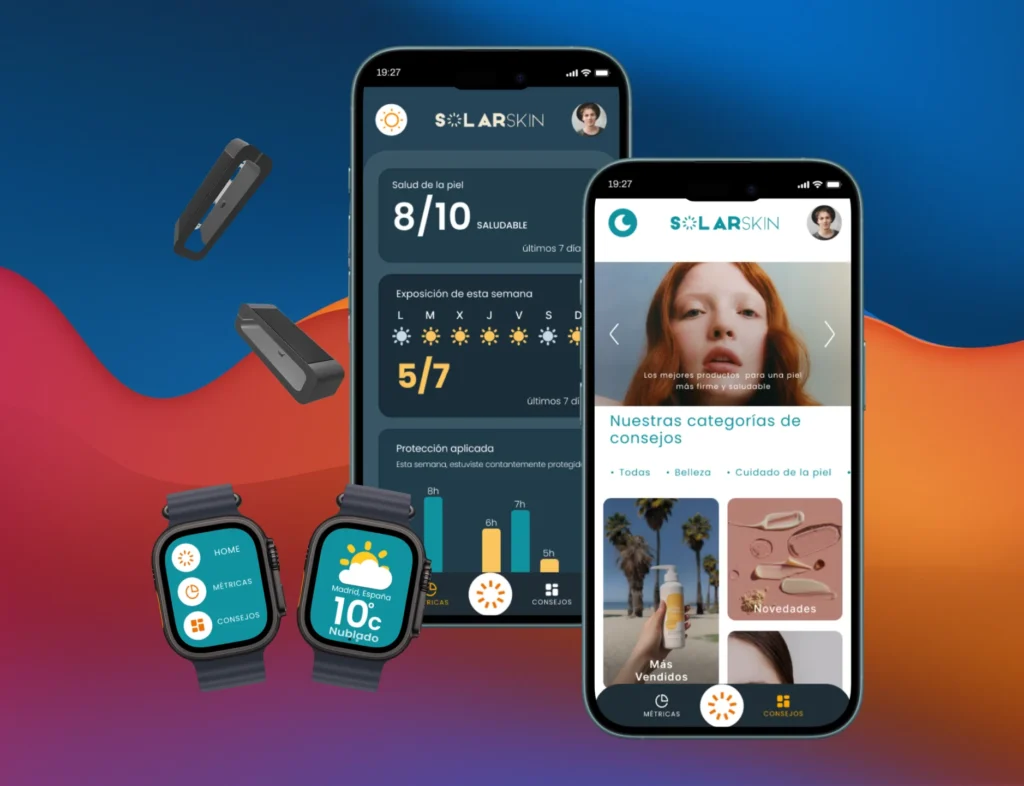

Core Features

Personal Music

AI creates complete songs based on the listener's musical tastes and habits, offering a unique experience.

Unique Lyrics

AI generates original lyrics. It adapts to the lyrical style of the user's favorite songs.

AI Covers

The album cover is AI-generated. It is a visual blend drawn from the user's base songs.

AI to Your Taste

Generation feeds on genre, tempo, play counts, and likes, so the musical experience is tailored to each listener.

Spotify Integration

The feature integrates directly into the Spotify interface. It presents a possible future of AI music creation.

Living Soundtrack

A unique soundtrack is created that evolves. It constantly reflects and grows with the user's tastes.

Hands-On Design Fiction

Collect Faint Signals

To begin this journey into the musical future, a close observation of the current world was undertaken around November 2024. At that time, although AI didn’t possess today’s capabilities, an accelerated evolution was already evident. A faint yet potent signal was detected: artificial intelligence was no longer only producing text (as with ChatGPT or Bard), but was also generating images (e.g., Midjourney, DALL-E) and music (e.g., Amper Music, AIVA). This observation pointed to a clear trend: AI was likely to achieve much more in the future, opening a vast field for speculation.

Select Archetype

Following the exploration of faint signals, the next phase focused on defining the archetype that would shape our speculation. It was understood that the archetype, in this context, needed to be a tangible object or a specific application function. For the project, the function of an application was chosen as the central archetype. This decision allowed the design fiction to focus on how a particular feature of an existing platform could be radically transformed by AI, paving a clear path for solution conceptualization.

Present Stimulus Materials

In this phase, the focus shifted to nurturing imagination and contextualizing the musical future. To do so, stimulus materials were presented in the form of existing applications that, although they didn’t create complete AI-generated songs, performed similar or inspiring functions in the realm of music creation. This exercise allowed for expanding creative thinking and visualizing how current technology could scale or combine to achieve the vision of AI-generated music.

Extrapolate From Your Signals

After analyzing the growing capabilities of AI to generate content, including images (e.g., Midjourney, DALL-E) and music (e.g., Amper Music, AIVA), possible musical futures were extrapolated. The most fascinating signal indicated that, perhaps, in the near future, not even a prompt would be needed to create a song or its album art. A scenario was envisioned where, based on the user’s existing musical tastes in any application (like songs they ‘like’ or their playback history), AI could generate much more personalized tracks. This would include a rhythm, lyrics, duration, and music type tailored to each listener, with melodies that resonate deeply, specific instrumentation, and even the possibility of real-time collaboration with the user to co-create their ideal soundtrack.
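As a rough illustration of the "no prompt needed" scenario, the sketch below derives a simple taste profile from likes and playback history. It is only a hypothetical example, not part of the prototype: the `Play` record, the `taste_profile` function, and the idea of weighting liked tracks three times more than plain plays are all assumptions made for illustration.

```python
from collections import Counter
from dataclasses import dataclass


@dataclass(frozen=True)
class Play:
    # Hypothetical playback record; the fields are illustrative assumptions.
    track_id: str
    genre: str
    liked: bool


def taste_profile(history: list[Play], like_weight: float = 3.0) -> dict[str, float]:
    """Return each genre's share of weighted listening (shares sum to 1.0)."""
    scores = Counter()
    for play in history:
        # Assumption: a liked track counts more than a plain play.
        scores[play.genre] += like_weight if play.liked else 1.0
    total = sum(scores.values())
    if total == 0:
        return {}
    return {genre: score / total for genre, score in scores.items()}


history = [
    Play("t1", "jazz", liked=True),
    Play("t2", "rock", liked=False),
]
profile = taste_profile(history)
print(profile)
```

A profile like this could stand in for the prompt a listener would otherwise have to write, which is the core of the extrapolated scenario.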

Identify What If

Following the extrapolation of signals about the future of AI-generated music, the next step was to crystallize that vision into a specific and powerful question. To do so, existing applications were evaluated and selected, seeking a starting point to propose a more realistic and credible future. The key question posed, which encapsulates the project’s essence, was: What if Spotify generated complete songs with AI based on its listeners’ musical tastes? This formulation allowed the design fiction to focus on a tangible and user-relevant scenario.

Know Your Tropes

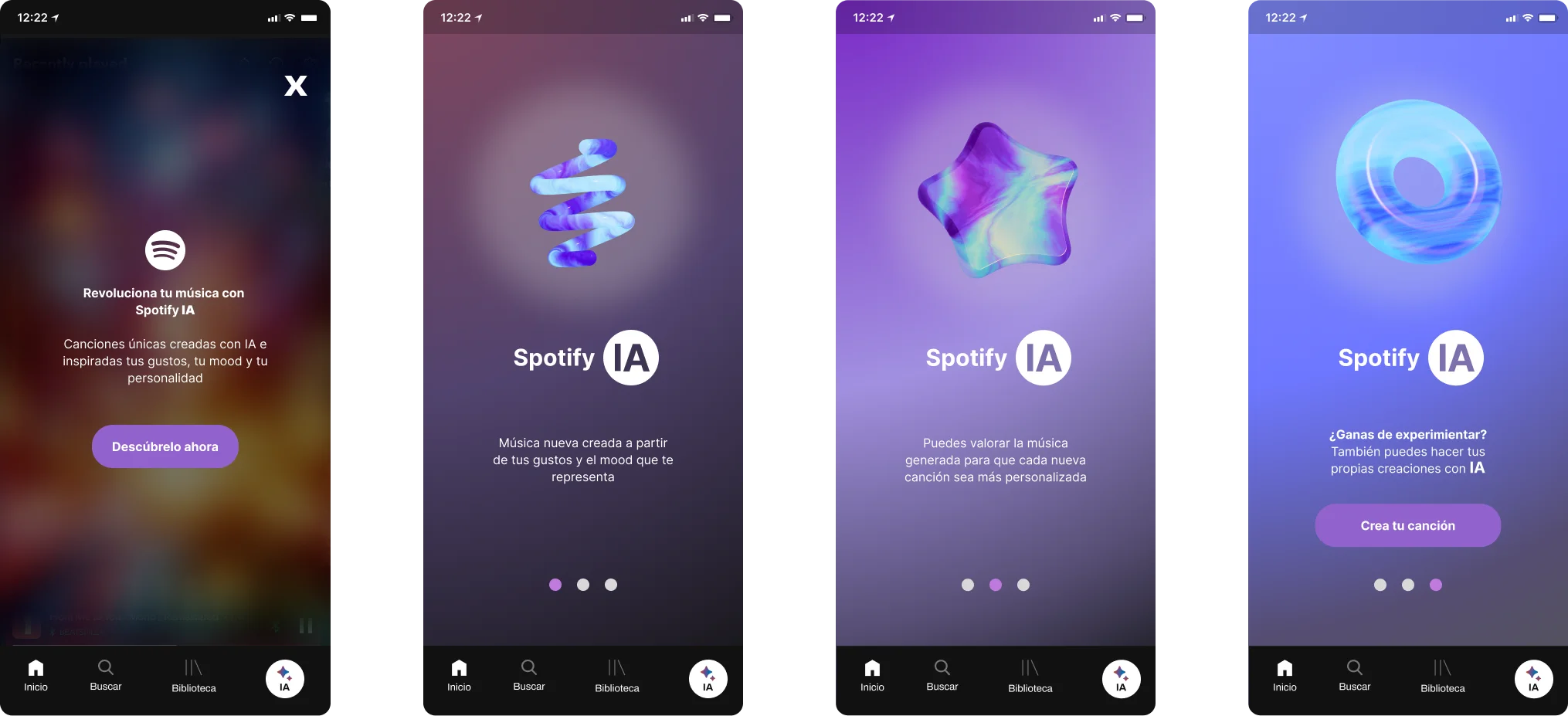

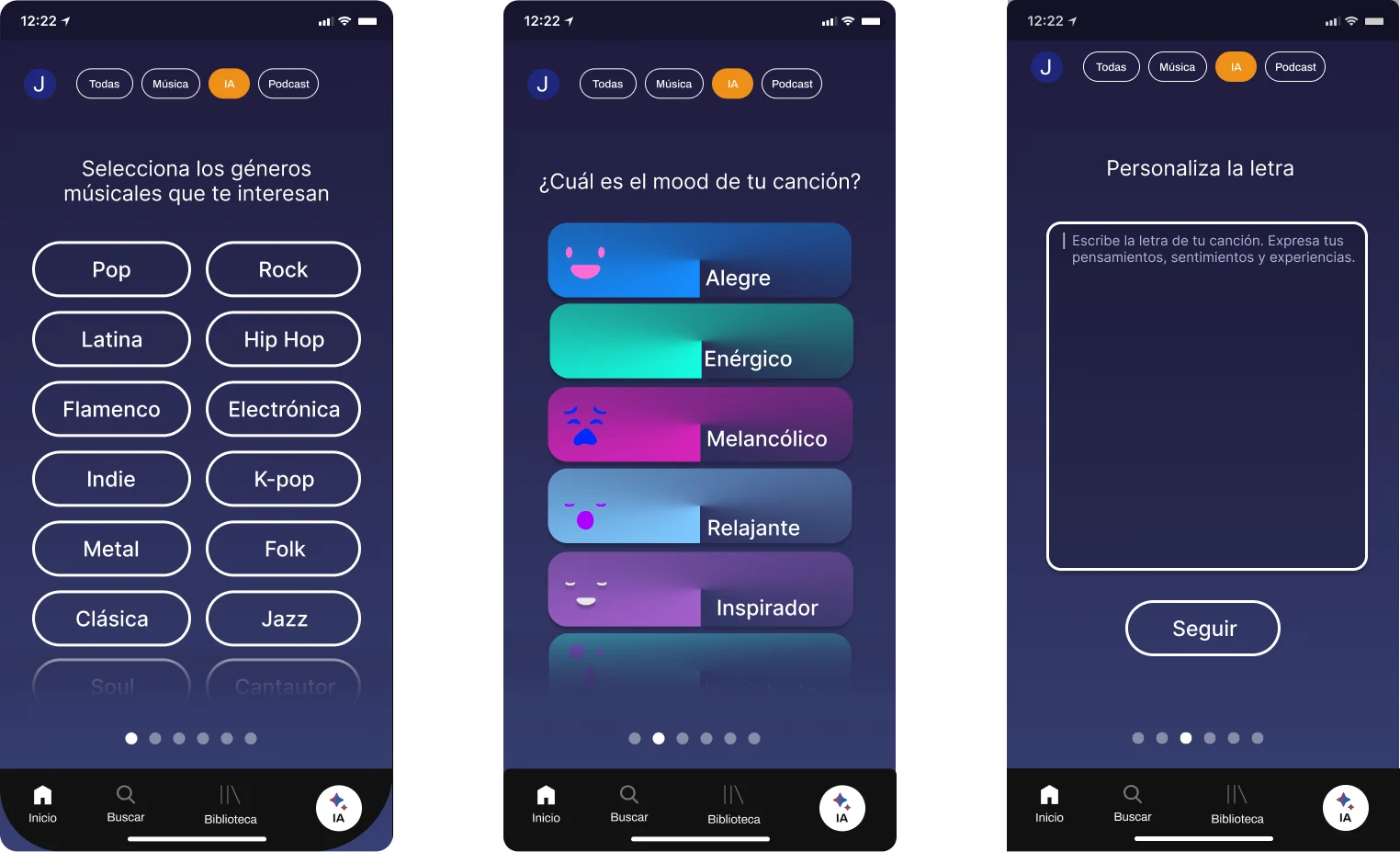

To make the selected application function archetype convincing, it was crucial to thoroughly understand its ‘tropes’. This meant identifying the essential characteristics that would compose it, similar to how a cookie box always has its nutritional information. For this case, it was indispensable that the AI-generated song function included key elements like lyrics and a recognizable album cover. Furthermore, to ensure the function was easily adopted and understood by users, it had to be immediately recognizable, supported by an intuitive onboarding process. Thus, an experience was built that, despite coming from the future, would feel familiar and accessible.

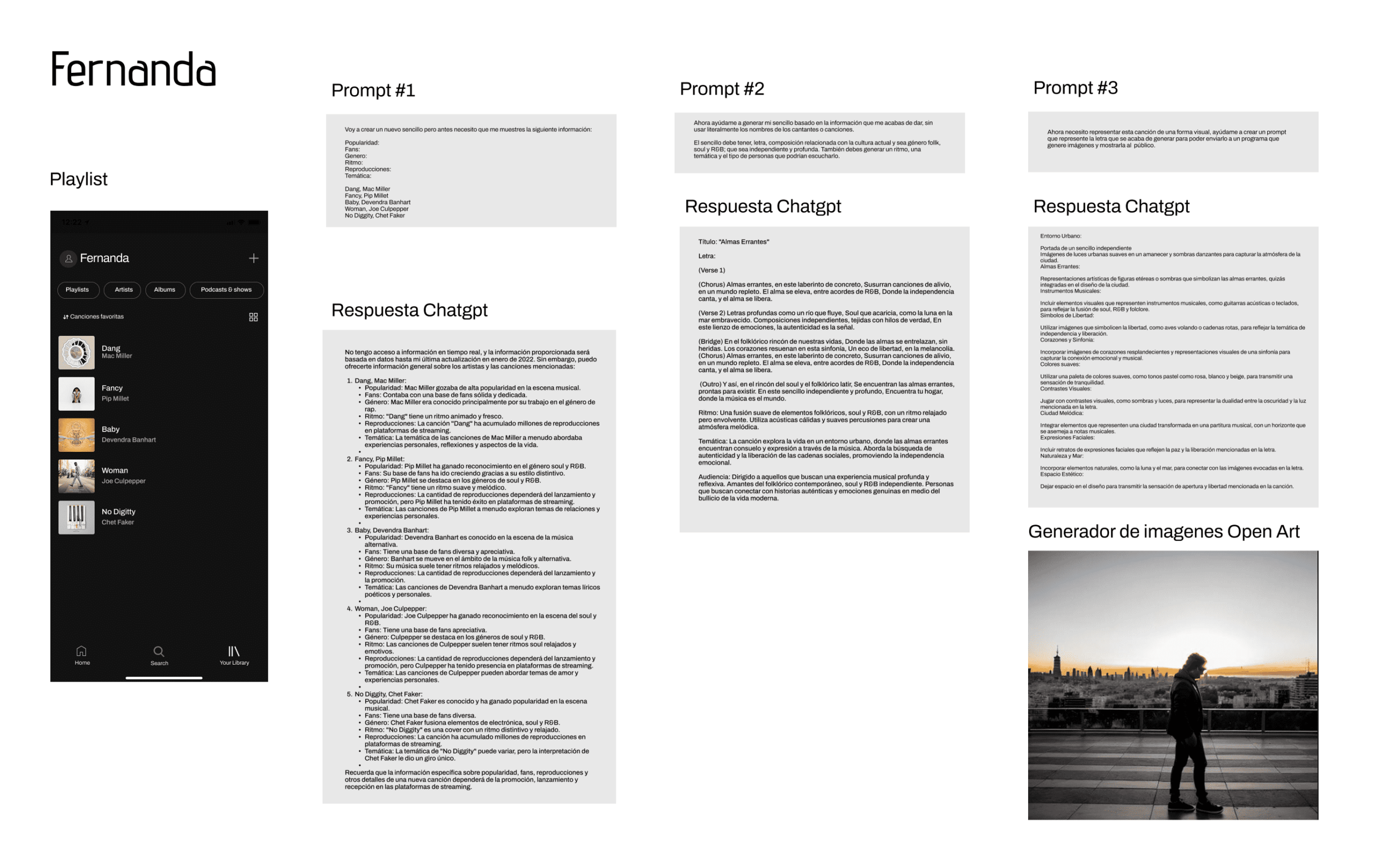

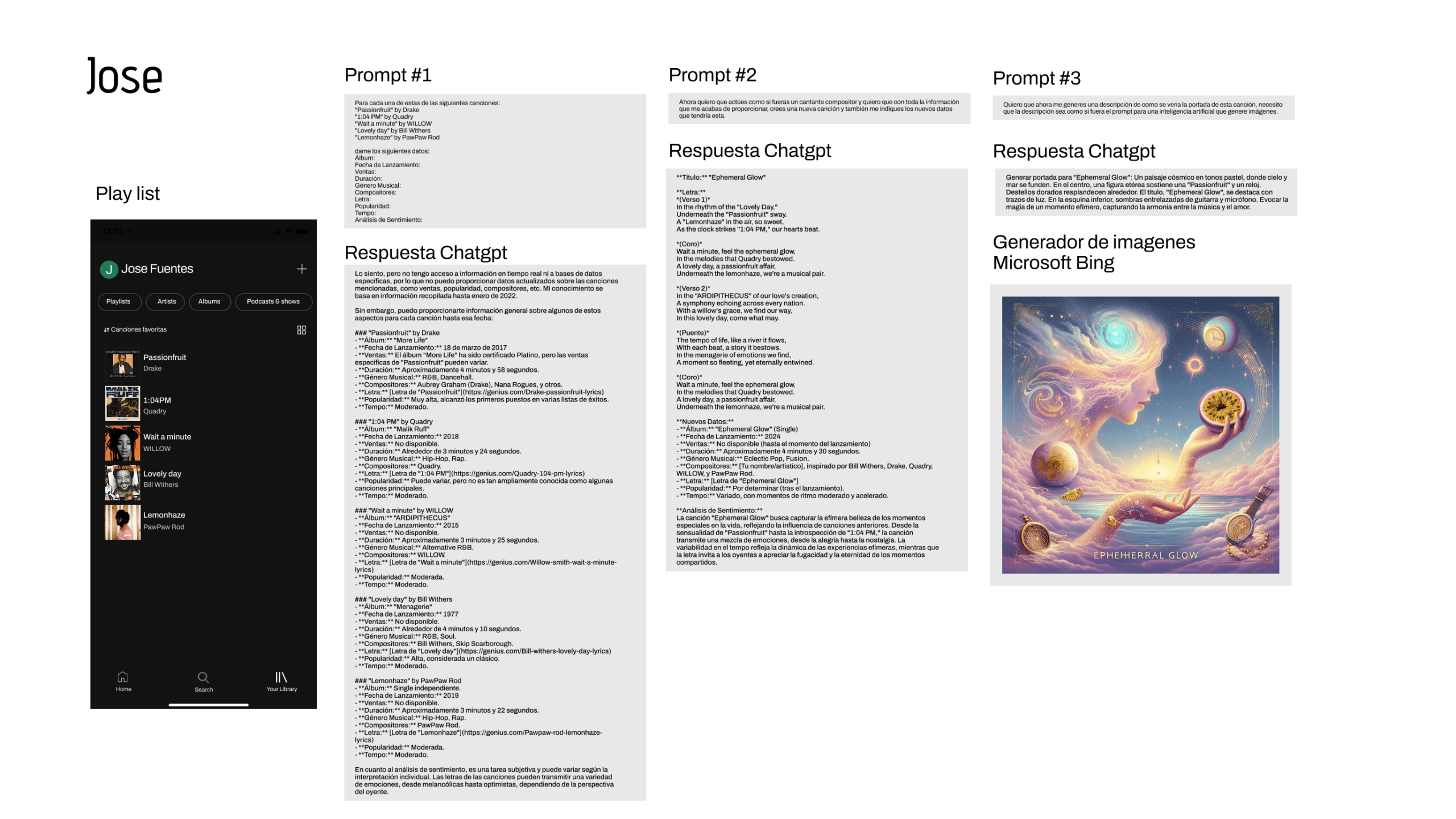

Design Workshop

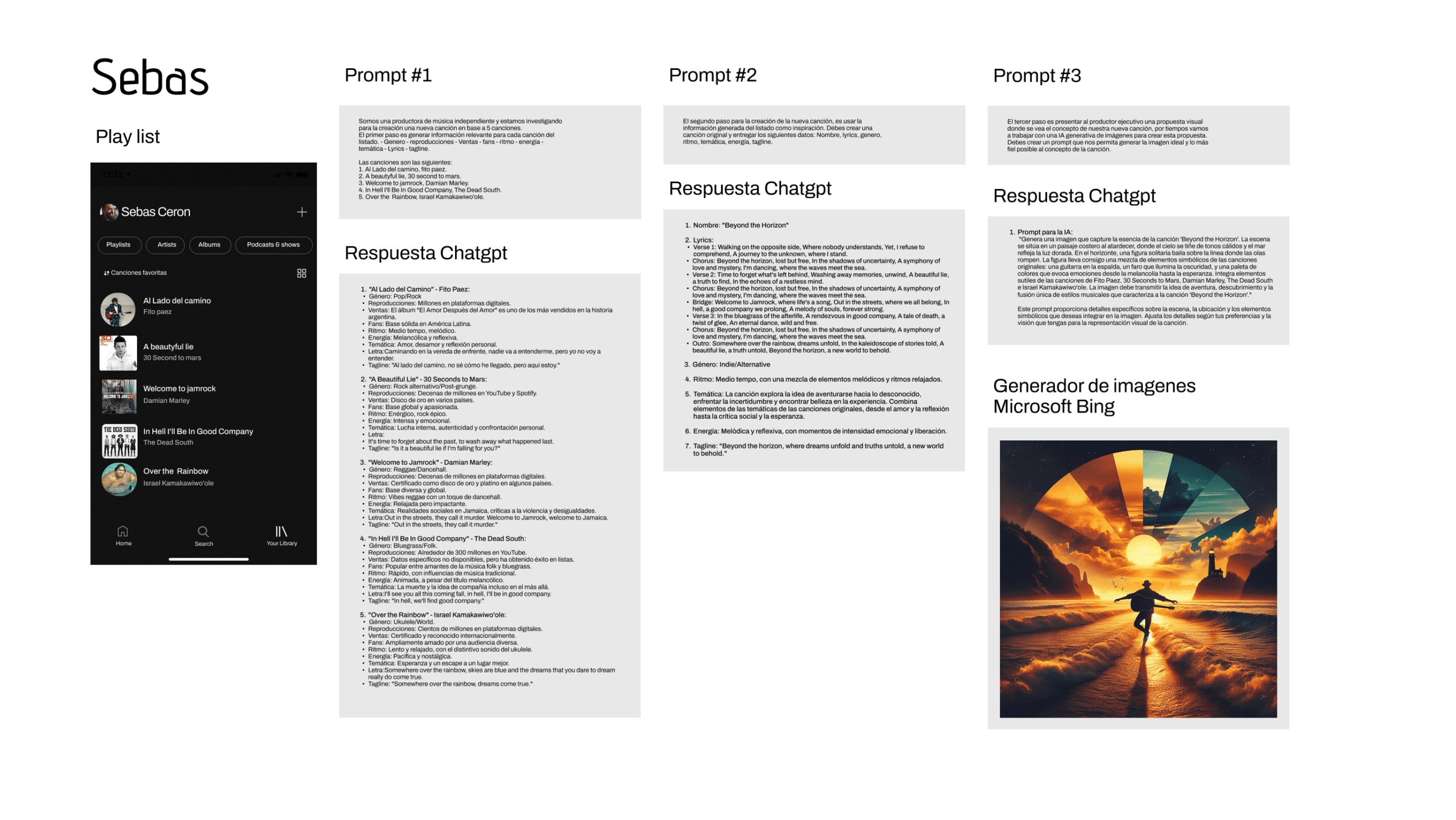

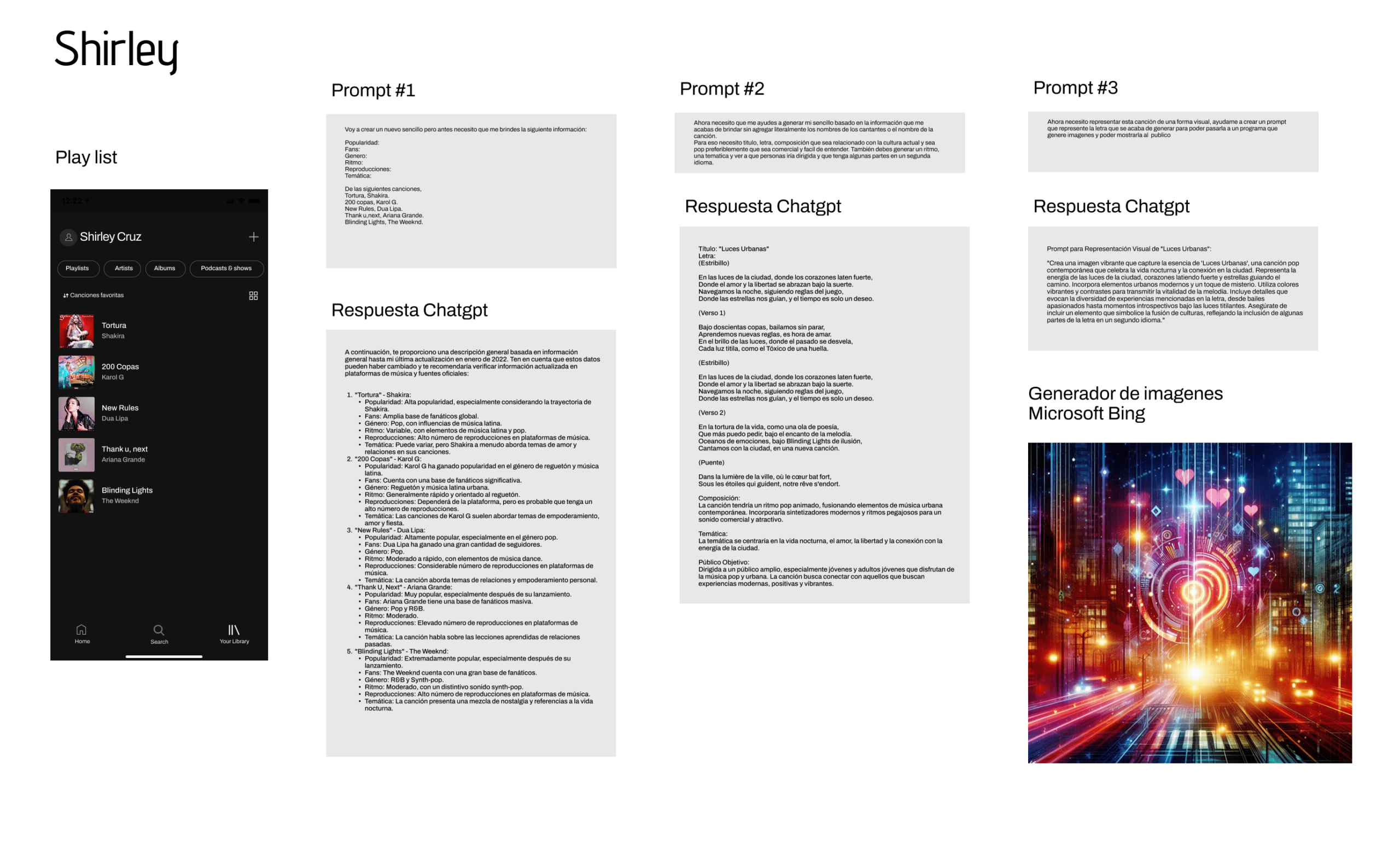

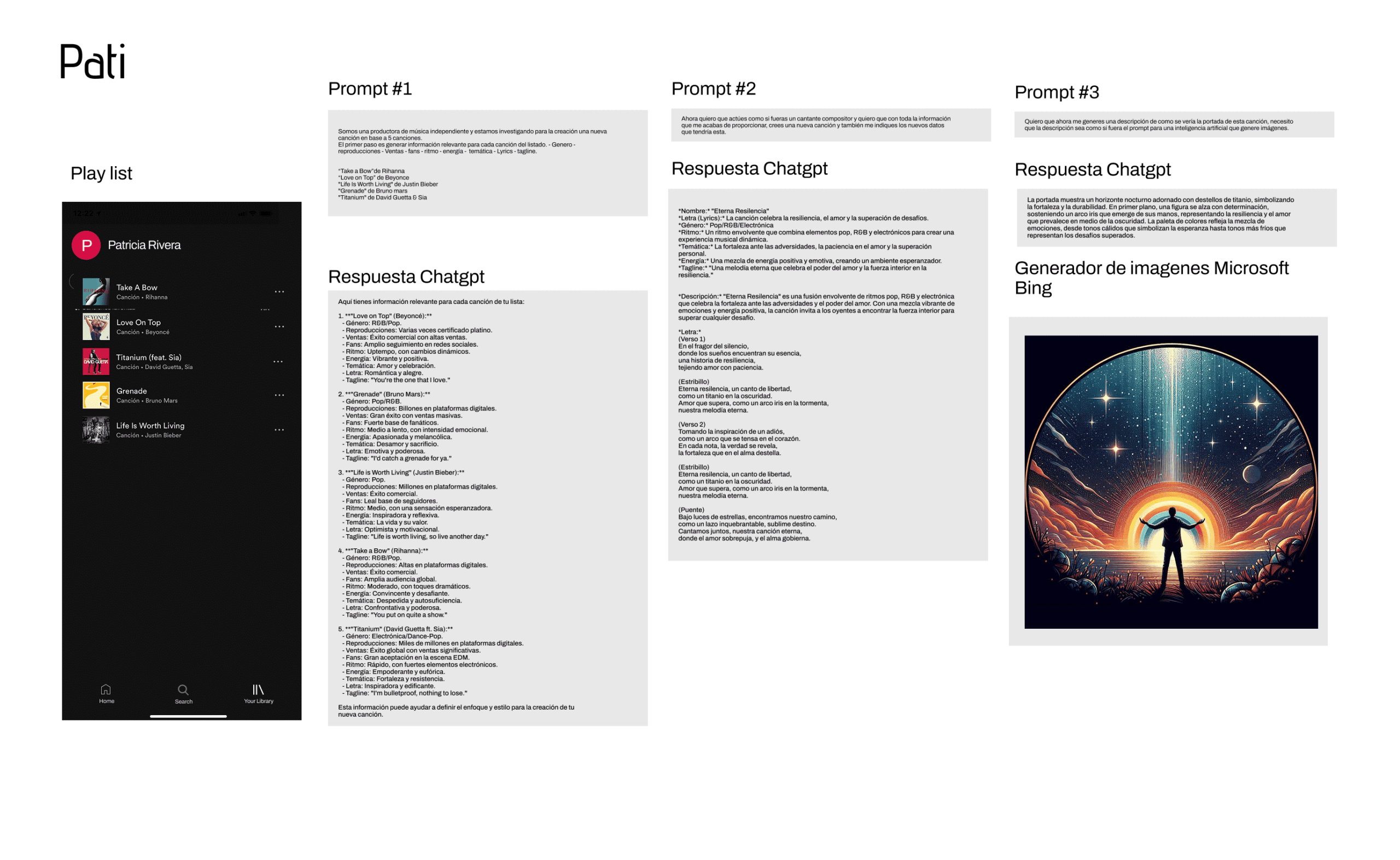

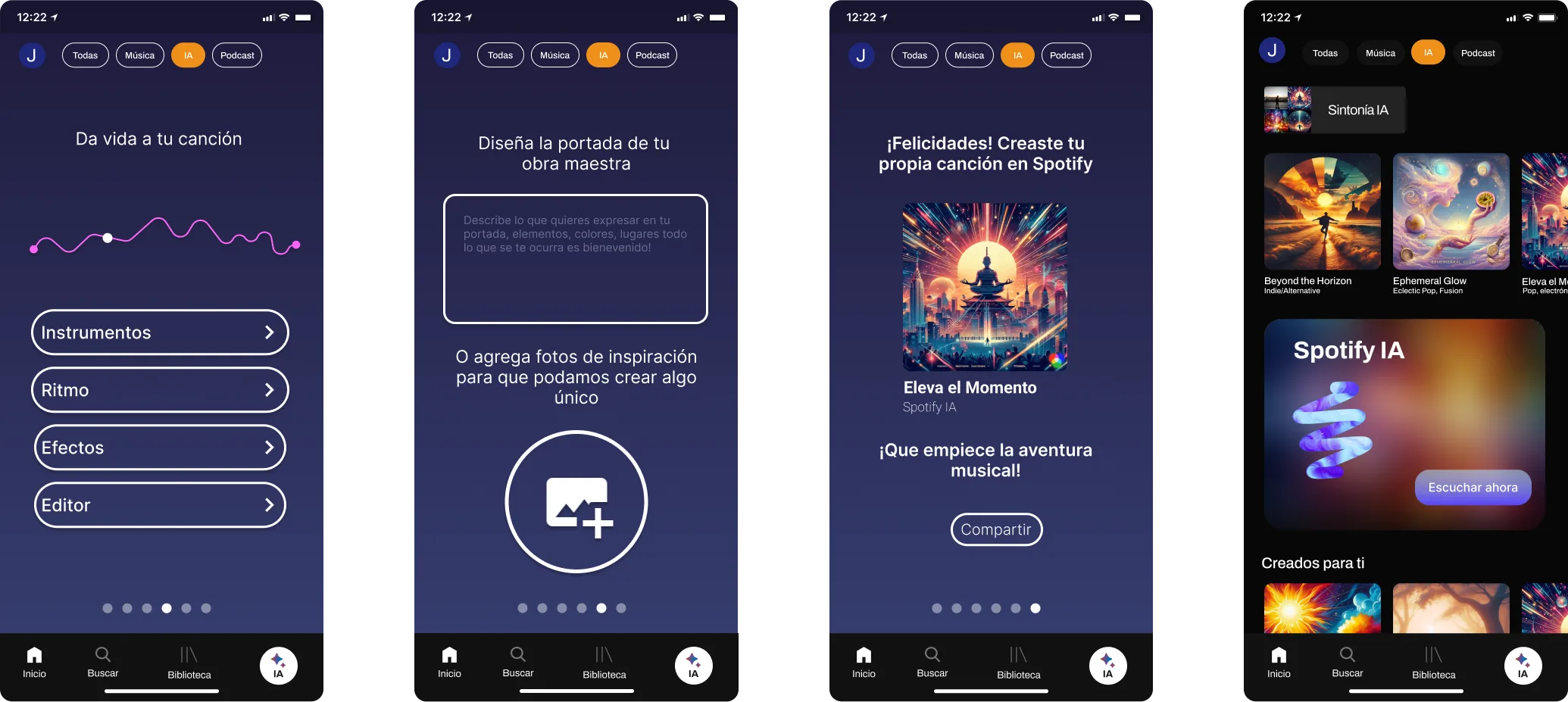

To consolidate all ideas in a manipulable space, a collaborative design workshop was organized. In this session, the AI prompting strategy was defined: the data from five existing songs would be used as a base and example (as the idea was for the AI to generate music based on user tastes), along with detailed characteristics (such as title, duration, rhythm, and genre), for the AI to generate a new song. These attributes were then combined to inspire the title and lyrics for the new track. Finally, a prompt was generated for another AI to create the album cover, including associated images and videos, all managed in Figma to visualize and prototype the initial interface ideas.
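The prompting strategy described above could be sketched roughly as follows. This is an illustrative sketch only, not the prompts actually used in the workshop: the `BaseSong` record, the two function names, the sample song data, and the prompt wording are all hypothetical. It simply shows how the attributes of five base songs (title, duration, rhythm, genre) might be combined into one prompt for the song and a second prompt for the album cover.

```python
from collections import Counter
from dataclasses import dataclass
from statistics import mean


@dataclass(frozen=True)
class BaseSong:
    # Hypothetical record mirroring the attributes named in the workshop.
    title: str
    duration_s: int
    tempo_bpm: int
    genre: str


def build_song_prompt(base_songs: list[BaseSong]) -> str:
    """Combine the base songs' attributes into a text prompt for a music model."""
    if not base_songs:
        raise ValueError("at least one base song is required")
    # Most frequent genre among the base songs.
    top_genre = Counter(s.genre for s in base_songs).most_common(1)[0][0]
    avg_tempo = round(mean(s.tempo_bpm for s in base_songs))
    avg_duration = round(mean(s.duration_s for s in base_songs))
    titles = ", ".join(s.title for s in base_songs)
    return (
        f"Compose a new {top_genre} song at about {avg_tempo} BPM, "
        f"roughly {avg_duration} seconds long, inspired by: {titles}."
    )


def build_cover_prompt(song_title: str, base_songs: list[BaseSong]) -> str:
    """Build a second prompt, meant for an image model, for the album cover."""
    titles = ", ".join(s.title for s in base_songs)
    return (
        f"Album cover for '{song_title}', visually blending the moods of: {titles}."
    )


# Made-up sample data standing in for a listener's five base songs.
songs = [
    BaseSong("Song A", 180, 100, "pop"),
    BaseSong("Song B", 200, 110, "pop"),
    BaseSong("Song C", 220, 120, "rock"),
    BaseSong("Song D", 210, 90, "pop"),
    BaseSong("Song E", 190, 130, "indie"),
]
print(build_song_prompt(songs))
print(build_cover_prompt("Night Drive", songs))
```

Keeping the song prompt and the cover prompt as separate steps mirrors the workshop's flow, where one AI generated the track and another generated the artwork.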

Make The Thing

With all song details ready (lyrics, title, and album cover images) and the idea consolidated, it was time to shape the diegetic prototype. In this phase, the Spotify interface incorporating the AI-generated music function was designed. This included creating an intuitive onboarding experience to guide the user through the new feature. The process culminated in an interactive simulation that not only displayed app interactions but also integrated the sounds of the generated songs, making the vision of the musical future even more real and immersive.

Disseminate

With the diegetic prototype now ready, the next crucial step was to share and disseminate the vision of the musical future. The Figma link to the prototype was made public, allowing direct access to the experience. Additionally, the project was exhibited to UX/UI master’s students, with the primary goal of generating debate and new ideas. The questions it aimed to provoke included: Would the existence of this feature be a good idea? Who would use it? Would it be better on Spotify or Apple Music? Or perhaps as an AI DJ app? These are precisely the reflections that sharing this type of diegetic prototype is meant to generate.

Debate And Reflect

With the dissemination of the diegetic prototype, the final stage of the process focused on fostering reflection and dialogue. The main goal was not to find a definitive answer, but to open up space for critical debate about the implications of the proposed musical future. Key questions such as the viability of these functions, their impact on Spotify or Apple Music, or the emergence of new roles like an AI DJ, were left open for discussion. This final step underscores the provocative nature of design fiction, aiming for the vision of the future to inspire new questions and conversations. From that debate, proposals for the real world can emerge: some present ideas close to the near future, others raise further questions about the more distant future, and all anticipate solutions that can begin in the present.

Results

Debate Provoked

The diegetic prototype sparked critical conversations. It invites deep reflection on the future of AI and music. This prompts the audience to actively consider technology’s implications.

Credible Future Vision

A tangible, convincing AI music experience was presented. The proposed future became understandable and relatable. The audience could visualize themselves interacting with this musical reality.

Exploration of Implications

The project opened new questions about creativity and personalization. It also explored AI’s impact on other domains. It fosters broader thinking about how technology redefines our relationship with art and daily life.

Methodology Validated

Design fiction proved a powerful tool. It allows exploring complex futures and generating relevant dialogue. This confirms its value for strategic anticipation and co-creating desirable futures.
